New eLetter in Science questions Meta-funded study suggesting its news-feed algorithms are not major drivers of misinformation

Posted 27 September, 2024

A new eLetter has called into question the conclusion of a widely reported study, funded by Meta, that suggested Facebook and Instagram algorithms are not major drivers of misinformation based on a claim that the platforms successfully filtered out untrustworthy news surrounding the 2020 election.

The work, published in the journal Science, by a team of researchers led by Przemek Grabowicz, an Assistant Professor at University College Dublin, shows the Meta-funded research was conducted during a short period when Meta temporarily introduced a new, more rigorous news algorithm rather than its standard one, and that the researchers did not account for the algorithmic change.

This helped to create the misperception, widely reported by the media, that Facebook and Instagram news feeds were largely reliable sources of trustworthy news.

“The first thing that rang alarm bells for us,” said lead author Chhandak Bagchi, a graduate student in the Manning College of Information and Computer Science at UMass Amherst, “was when we realised that the previous researchers… conducted a randomised control experiment during the same time that Facebook had made a systemic, short-term change to their news algorithm.”

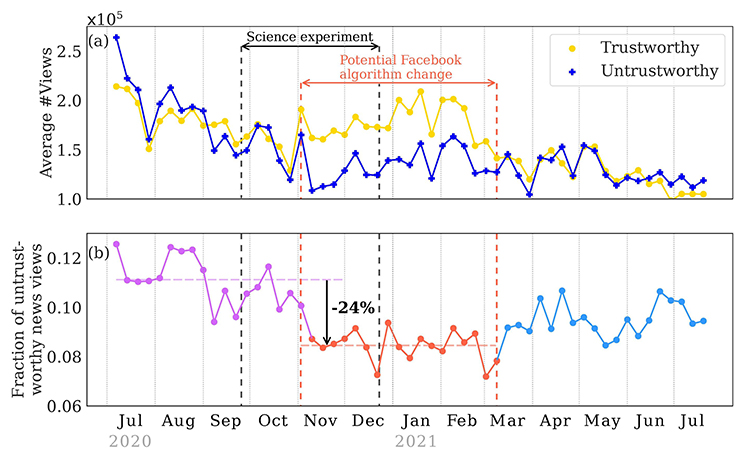

Beginning around the start of November 2020, Meta introduced 63 “break glass” changes to Facebook’s news feed which were expressly designed to diminish the visibility of untrustworthy news surrounding the 2020 US presidential election. These changes were successful.

“We applaud Facebook for implementing the more stringent news feed algorithm,” said Dr Grabowicz, the paper’s senior author, who recently joined University College Dublin but conducted this research at UMass Amherst’s Manning College of Information and Computer Science.

Bagchi, Grabowicz and their co-authors point out that the newer algorithm cut user views of misinformation by at least 24%. However, the changes were temporary, and the news algorithm reverted to its previous practice of promoting a higher fraction of untrustworthy news in March 2021.

The Meta-funded study ran from September 24 through December 23, 2020, and substantially overlapped with the short window when Facebook’s news was determined by the more stringent algorithm. However, the paper did not clarify that the data captured an exceptional moment for the social media platform.

“Their paper gives the impression that the standard Facebook algorithm is good at stopping misinformation, which is questionable,” said Dr Grabowicz.

Part of the problem, as Bagchi, Grabowicz, and their co-authors write, is that studies such as the one funded by Meta have to be “pre-registered”, meaning companies could know well ahead of time what researchers are looking for.

And yet, social media platforms are not required to make any public notification of significant changes to their algorithms.

“This can lead to situations where social media companies could conceivably change their algorithms to improve their public image if they know they are being studied,” write the authors, who include Jennifer Lundquist (professor of sociology at UMass Amherst), Monideepa Tarafdar (Charles J. Dockendorff Endowed Professor at UMass Amherst’s Isenberg School of Management), Anthony Paik (professor of sociology at UMass Amherst) and Filippo Menczer (Luddy Distinguished Professor of Informatics and Computer Science at Indiana University).

Though Meta funded and supplied 12 co-authors for the 2023 study, it asserts that “Meta did not have the right to pre publication approval”.

“Our results show that social media companies can mitigate the spread of misinformation by modifying their algorithms but may not have financial incentives to do so,” said Professor Paik.

“A key question is whether the harm of misinformation to individuals, the public and democracy should be more central in their business decisions.”

The Science eLetter of Bagchi et al. concludes that “the Digital Services Act in the European Union [...] could empower researchers to conduct independent audits of social media platforms and better understand the potentially serious effects of ever-changing social media algorithms on the public.”

This is one of the reasons, says Dr Grabowicz, why he joined University College Dublin.

By: David Kearns, Digital Journalist / Media Officer, UCD University Relations

To contact the UCD News & Content Team, email: newsdesk@ucd.ie