Assessment Integrity in the Era of Large Language Models: Threats and Opportunities within the UCD College of Engineering & Architecture

Overview

This Learning Enhancement project has been funded through SATLE (Strategic Alignment of Teaching and Learning Enhancement) with the support of the National Forum / HEA.

| PROJECT TITLE: | Assessment Integrity in the Era of Large Language Models: Threats and Opportunities within the UCD College of Engineering & Architecture |
|---|---|
| PROJECT COORDINATOR: | Dr Paul Cuffe, School of Electrical & Electronic Engineering |
| COLLABORATORS: | Dr Emer Doheny; Dr John Healy; Dr Kevin Nolan; Mr Aness al Qawlaq (UCD ElecSoc); Mr Abdulaziz Alharmoodi (UCD ElecSoc) |
| TARGET AUDIENCE: | UCD staff & students; the international pedagogy research community; leadership of UCD College of Engineering & Architecture |

Background

Large language models (LLMs), particularly since ChatGPT's release in November 2022, have created an urgent need to examine assessment practices in higher education. These AI tools have demonstrated remarkable, and escalating, capabilities in generating text, debugging code, and solving technical problems: precisely the kinds of tasks traditionally used in university assessment.

This project recognised that LLMs posed particular challenges for engineering education. Unlike earlier tools, these models could produce computer scripts and circuit designs and handle technical data, directly threatening many traditional forms of assessment within engineering disciplines.

Rather than taking a purely defensive stance, the project acknowledged dual imperatives: protecting academic integrity while preparing students for a professional world where AI tools would be commonplace. The College of Engineering & Architecture, with over 850 modules across six Schools, provided an ideal scope for examining these impacts across different technical disciplines.

The project structure engaged multiple stakeholders, including faculty from different engineering disciplines and student representatives through UCD ElecSoc, recognising that effective solutions would need to incorporate diverse perspectives and experiences.

Goals

The first aim was to identify urgent threats to assessment integrity within the College posed by LLM tools. This was to be achieved through a comprehensive audit of modules, creating a "traffic-light" classification of their vulnerability to AI-enabled cheating. This systematic review would cover over 850 modules across six engineering Schools.

The second aim was to develop creative solutions for "LLM-proofing" modules while simultaneously teaching students to use these tools appropriately. Rather than simply trying to prevent AI use, the project sought ways to integrate these tools constructively into engineering education.

These aims were structured into three specific work packages:

- A threat analysis auditing modules across the College

- An opportunity analysis through a student hackathon competition

- A pilot rollout of LLM-aware teaching and assessment approaches

The intended outcome was to help the College rapidly adjust its assessment strategies while maintaining educational quality and preparing students for an AI-augmented professional environment.

Approach

The project adopted a three-phase approach based on evidence gathering, student engagement, and practical implementation:

First, a comprehensive audit was conducted across the College's modules with the help of research assistants recruited from senior engineering students. This audit created a "traffic-light" classification system identifying modules' vulnerability to AI-enabled academic dishonesty.

Student partnership was centrally embedded through collaboration with UCD ElecSoc to run the "AI for Learning Hackathon 2023." This competition offered substantial prizes (€4,500 total) to encourage students to develop creative solutions for integrating AI tools into engineering education. The format included a kick-off event with refreshments, followed by team formation and a final pitch competition where teams presented their proposals.

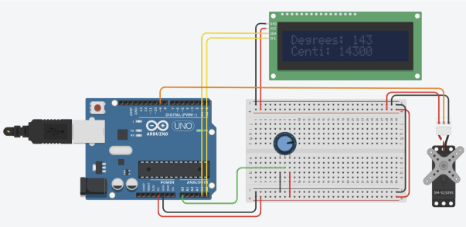

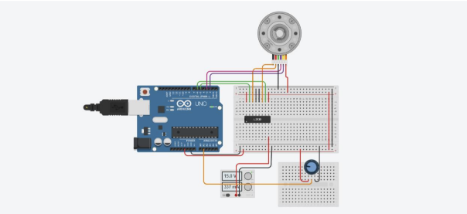

The final phase involved piloting new LLM-aware teaching approaches in selected modules. This included practical innovations like implementing browser-based Arduino programming simulations with built-in mechanisms to protect assessment integrity while embracing new technologies.

Throughout, student involvement was maintained through ElecSoc representatives on the project team who helped coordinate workshops and dissemination activities beyond the competition itself.

Results

The first deliverable was a College-wide assessment audit, which provided traffic-light classifications of module vulnerability to AI-enabled cheating and was shared with relevant School leadership. It covered over 850 modules across six engineering Schools, creating an actionable database to inform teaching adaptations.

The second key outcome was the successful AI for Learning Hackathon, which engaged students in developing solutions for AI-era assessment. The competition's substantial prize fund (€3,000 first prize, €1,000 second prize, €500 third prize) encouraged meaningful participation and generated implementable proposals.

The project's scholarly impact is evidenced by four IEEE conference papers and two case studies, including:

- A threat assessment framework presented at IEEE RTSI 2024

- Three papers at IEEE ITHET 2024 examining applications in circuit design, power engineering, and doctoral education

- Published case studies on an AI-resistant in-class Arduino programming exercise (EEEN10020) and on the replacement of an essay assignment with an interactive poster session (MEEN30130)

Resources

- Y. Mormul, J. Przybyszewski, A. Nakoud and P. Cuffe, “Reliance on Artificial Intelligence Tools May Displace Research Skills Acquisition Within Engineering Doctoral Programmes: Examples and Implications”, presented at IEEE International Conference on IT in Higher Education and Training, Paris, France, November 2024

- A. Hickey, C. O’Faolain and P. Cuffe, “Large Language Models in Power Engineering Education: A Case Study on Solving Optimal Dispatch Coursework Problems”, presented at IEEE International Conference on IT in Higher Education and Training, Paris, France, November 2024

- Y. Mormul, J. Przybyszewski, T. Siriburanon, J. Healy and P. Cuffe, “Gauging the Capability of Artificial Intelligence Chatbot Tools to Answer Textbook Coursework Exercises in Circuit Design Education”, presented at IEEE International Conference on IT in Higher Education and Training, Paris, France, November 2024

- A. Hickey, C. O’Faolain, J. Healy, K. Nolan, E. Doheny and P. Cuffe, “A Threat Assessment Framework for Screening the Integrity of University Assessments in the Era of Large Language Models”, presented at 8th IEEE International Forum on Research and Technologies for Society and Industry Innovation, Lecco, Italy, September 2024

- J. Li, A. Mukherjee and P. Cuffe, “Guarding Code Originality and Motivating Student Engagement: In-class Simulated Microcontroller Assignments in a Stage 1 Robotics Module”, case study in themed collection ‘Using Generative AI, GenAI, in T&L and Assessment in Higher Education’, January 2025

- J. Li, P. Cuffe and J. O’Donnell, “From Essay to Poster: Exploring a New Format for an Energy Module Assignment”, case study in themed collection ‘Using Generative AI, GenAI, in T&L and Assessment in Higher Education’, January 2025